GPU meets PCIe-based hard drives: Seagate and Nvidia demo NVMe HDDs

But when will they be available?

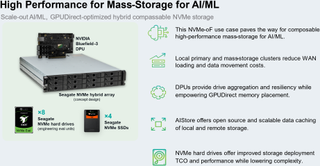

Hard drives will remain the most cost-effective storage solution for data centers for years to come, but to make them more suitable for AI data centers, Seagate is developing HDDs that use a common PCIe interface and the NVMe 2.0 protocol. At GTC, Seagate demonstrated a proof-of-concept system running NVMe HDDs, NVMe SSDs, Nvidia's BlueField-3 DPU, and AIStore software to show how NVMe transforms hard drives for AI workloads. Seagate is ahead of its rivals, which are also working on NVMe HDDs.

New protocol, new performance

Hard disk drives have traditionally used specialized interfaces, such as SCSI, Parallel ATA, Serial ATA (SATA), and Serial Attached SCSI (SAS). These work fine but are reaching their limits in modern, high-performance data environments, particularly AI and large-scale data centers. Both SATA and SAS are built on command sets (ATA and SCSI) that date back to the 1980s and carry legacy protocol layers not suited to modern high-speed data processing. SAS and SATA setups also require host bus adapters (HBAs) and additional controller layers, which add complexity, potential points of failure, and latency. As a result, these architectures are poorly suited to AI workloads, which require high-throughput, low-latency access to massive datasets.

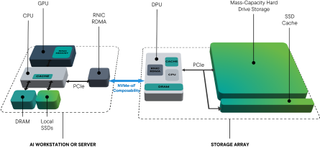

Compared to SAS/SATA, NVMe paired with PCIe offers significantly higher bandwidth, lower latency, and better scalability. Unlike SATA and SAS, which are limited to 6 Gbps (SATA) and 12-24 Gbps (SAS) per link and rely on additional hardware such as HBAs and expanders, NVMe runs over the industry-standard PCIe interface, which supports speeds of up to 128 GB/s. A single HDD will not need anywhere near that much for quite a while, but at the system level, the more bandwidth, the merrier. PCIe also greatly reduces system complexity and simplifies scaling. NVMe also enables direct GPU-to-storage access (GPUDirect) through DPUs, bypassing CPUs and thus reducing CPU bottlenecks, and it supports 64K queues with 64K commands per queue, vastly improving parallel processing, which is important for AI systems.
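To put the bandwidth and queueing figures above in perspective, here is a rough back-of-the-envelope calculation. It computes nominal per-direction link bandwidth as transfer rate times line-encoding efficiency times lane count, divided by eight bits per byte, and compares the maximum number of outstanding commands under SATA's AHCI/NCQ (one queue of 32 commands) with NVMe's 64K queues of 64K commands. The helper names are hypothetical, and only line encoding is modeled (PCIe 6.0 FLIT overhead and other protocol costs are ignored), so real-world throughput will be somewhat lower.

```python
"""Back-of-the-envelope link bandwidth and queue-depth comparison.

Nominal figures only: line encoding is modeled, other protocol overhead is not.
"""

# name: (transfer rate per lane in GT/s, line-encoding efficiency, lane count)
LINKS = {
    "SATA III (6 Gbps, 8b/10b)": (6.0, 8 / 10, 1),
    "SAS-3 (12 Gbps, 8b/10b)": (12.0, 8 / 10, 1),
    "PCIe 5.0 x16 (128b/130b)": (32.0, 128 / 130, 16),
    "PCIe 6.0 x16 (FLIT overhead not modeled)": (64.0, 1.0, 16),
}


def link_gbytes_per_s(gt_per_s: float, encoding_efficiency: float, lanes: int = 1) -> float:
    """Nominal per-direction bandwidth in GB/s: GT/s x efficiency x lanes / 8 bits per byte."""
    return gt_per_s * encoding_efficiency * lanes / 8


def max_outstanding_commands(queues: int, commands_per_queue: int) -> int:
    """Upper bound on the number of commands that can be in flight at once."""
    return queues * commands_per_queue


if __name__ == "__main__":
    for name, (rate, efficiency, lanes) in LINKS.items():
        print(f"{name}: {link_gbytes_per_s(rate, efficiency, lanes):.2f} GB/s")
    # SATA with AHCI/NCQ: a single queue of 32 commands.
    print("SATA/AHCI NCQ:", max_outstanding_commands(1, 32))
    # NVMe, using the 64K queues x 64K commands figures quoted above.
    print("NVMe:", max_outstanding_commands(64 * 1024, 64 * 1024))
```

Running it gives about 0.6 GB/s for a SATA III link versus 128 GB/s for a PCIe 6.0 x16 link, and 32 outstanding commands for AHCI/NCQ versus 2^32 for NVMe.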

Proof-of-concept system

To validate this architecture, Seagate built a proof-of-concept system integrating eight NVMe HDDs, four NVMe SSDs for caching, an Nvidia BlueField-3 DPU, and AIStore software, all running in a Seagate NVMe hybrid array enclosure.

The test machine demonstrated that direct GPU-to-storage access minimizes latency in AI workflows. Also, removing legacy SAS/SATA infrastructure simplified system architecture and increased storage efficiency. AIStore software dynamically optimizes data caching and tiering, which greatly enhances AI model training performance.
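The proof of concept pairs NVMe SSDs used for caching with NVMe HDDs. The minimal sketch below shows the general idea of a flash cache in front of a disk tier, using a simple least-recently-used (LRU) eviction policy. It is a hypothetical illustration: `TieredStore` and its methods are invented for this example, the dictionaries merely stand in for the SSD and HDD tiers, and LRU is a stand-in rather than AIStore's actual caching and tiering logic.

```python
from collections import OrderedDict
from typing import Any, Dict, Hashable


class TieredStore:
    """Hypothetical two-tier read path: a small LRU 'SSD' cache over an 'HDD' backing store."""

    def __init__(self, hdd: Dict[Hashable, Any], ssd_capacity: int) -> None:
        if ssd_capacity < 1:
            raise ValueError("ssd_capacity must be at least 1")
        self.hdd = hdd  # slow, high-capacity tier
        self.ssd: "OrderedDict[Hashable, Any]" = OrderedDict()  # fast tier, oldest entry first
        self.ssd_capacity = ssd_capacity
        self.hits = 0
        self.misses = 0

    def read(self, key: Hashable) -> Any:
        if key in self.ssd:
            # Cache hit: serve from flash and mark the entry as most recently used.
            self.ssd.move_to_end(key)
            self.hits += 1
            return self.ssd[key]
        # Cache miss: fetch from the HDD tier and promote the object into the SSD cache.
        self.misses += 1
        value = self.hdd[key]
        self.ssd[key] = value
        if len(self.ssd) > self.ssd_capacity:
            # Evict the least recently used entry to stay within the cache capacity.
            self.ssd.popitem(last=False)
        return value


if __name__ == "__main__":
    shards = {f"shard-{i}": f"data-{i}" for i in range(10)}
    store = TieredStore(shards, ssd_capacity=3)
    for key in ["shard-0", "shard-1", "shard-0", "shard-2", "shard-3", "shard-1"]:
        store.read(key)
    print(f"hits={store.hits} misses={store.misses} cached={list(store.ssd)}")
```

With a three-entry cache, that access pattern produces one hit and five misses, leaving shard-2, shard-3, and shard-1 in the cache. A production tiering layer would also weigh object size, access frequency, and write traffic, which this sketch deliberately leaves out.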

Seagate also says that the system can scale to exabyte levels when using NVMe over Fabrics (NVMe-oF). Specifically, NVMe-oF integration enables expansion of multi-rack AI storage clusters, which is crucial for scaling efficiently and, perhaps more importantly, seamlessly across large data centers.
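Simple arithmetic shows why exabyte-scale capacity implies multi-rack clusters, and therefore a fabric such as NVMe-oF. The sketch below counts how many drives and enclosures a given raw capacity requires. The 30 TB per-drive capacity and eight drives per enclosure (matching the eight NVMe HDDs in the proof of concept) are illustrative assumptions, and redundancy, formatting overhead, and SSD cache capacity are ignored.

```python
TB = 10 ** 12  # decimal terabyte, as used by drive vendors
EB = 10 ** 18  # decimal exabyte


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def drives_needed(raw_capacity_bytes: int, drive_capacity_bytes: int) -> int:
    """Number of whole drives required to reach the raw capacity."""
    return ceil_div(raw_capacity_bytes, drive_capacity_bytes)


def enclosures_needed(drives: int, drives_per_enclosure: int) -> int:
    """Number of whole enclosures required to house the drives."""
    return ceil_div(drives, drives_per_enclosure)


if __name__ == "__main__":
    drives = drives_needed(1 * EB, 30 * TB)    # assumption: 30 TB per HDD
    enclosures = enclosures_needed(drives, 8)  # assumption: 8 HDDs per enclosure, as in the PoC
    print(f"{drives} drives in {enclosures} enclosures for 1 EB raw")
```

Under these assumptions, one exabyte of raw capacity works out to 33,334 drives in 4,167 enclosures, far more than any single rack can hold.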

The trial confirmed that NVMe HDDs could support high-performance AI environments without requiring fully flash-based storage solutions, reducing costs while maintaining performance.

AI systems are driving exponential growth in data storage needs, with existing architectures facing significant constraints. SSDs deliver high-speed performance but are financially unsustainable for long-term storage at this scale. SAS and SATA HDDs offer affordability but introduce complexity and latency due to their reliance on host bus adapters (HBAs), proprietary silicon, and controller systems not optimized for AI's high-throughput, low-latency requirements. Cloud storage options further complicate infrastructure with high WAN data transfer costs, unpredictable retrieval times, and latency spikes, which hinder AI processing efficiency. These limitations result in complex, costly, and inefficient architectures, slowing down AI adoption and performance.

Future AI storage needs

Today, enterprises manage petabyte- to exabyte-scale datasets for AI model training and inference. Their needs will only grow, and this is where Seagate's plan to bring NVMe connectivity to HDDs and build a unified, efficient data center storage platform will shine.

From an HDD complexity point of view, adding NVMe to hard drives is not that expensive, as the HDDs retain SAS/SATA physical connectors and their traditional 3.5-inch form factor. The only changes are NVMe protocol support and a PCIe interface in the controller (which probably costs pennies), along with firmware that supports NVMe features and capabilities like GPUDirect. Since the transition to NVMe/PCIe connectivity eliminates HBAs and the complexity they bring, small increases in HDD prices will hardly be noticed by the industry.

However, as HDDs gain capacity, their IOPS per TB drops, which may hurt performance in AI clusters going forward (or require more flash to compensate). As a result, dual-actuator HDDs such as Seagate's Mach.2 may become preferable for AI clusters in the future. Such drives are, of course, more expensive than regular single-actuator HDDs, but each one still costs less than two single-actuator HDDs, so this will not cause any significant disruption.
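The IOPS-per-TB problem follows from drive mechanics: a hard drive's random IOPS is bounded by seek time plus rotational latency, which barely change as capacity grows. The sketch below models this with a simple formula, random IOPS ≈ actuators × 1000 / (average seek in ms + average rotational latency in ms), where average rotational latency is half a revolution. The 8 ms average seek time and 7200 RPM spindle speed are assumed example values, transfer time is ignored, and treating a dual-actuator drive such as Mach.2 as exactly doubling IOPS is an idealized assumption.

```python
def random_iops(avg_seek_ms: float = 8.0, rpm: int = 7200, actuators: int = 1) -> float:
    """Idealized small random-I/O rate: one seek plus half a revolution per I/O, per actuator."""
    avg_rotational_ms = 0.5 * 60_000 / rpm  # half a revolution, in milliseconds
    return actuators * 1000 / (avg_seek_ms + avg_rotational_ms)


def iops_per_tb(capacity_tb: float, actuators: int = 1,
                avg_seek_ms: float = 8.0, rpm: int = 7200) -> float:
    """Random IOPS available per terabyte of capacity."""
    return random_iops(avg_seek_ms, rpm, actuators) / capacity_tb


if __name__ == "__main__":
    for capacity in (10, 20, 30):
        single = iops_per_tb(capacity)
        dual = iops_per_tb(capacity, actuators=2)
        print(f"{capacity} TB: {single:.2f} IOPS/TB single-actuator, {dual:.2f} dual-actuator")
```

Under these assumptions, a drive delivers about 82 random IOPS regardless of its capacity, so tripling capacity from 10 TB to 30 TB cuts IOPS per TB to a third, while an ideal second actuator doubles it again.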

When?

One of the first things an avid reader would ask after learning about the benefits of NVMe for HDDs is when such hard drives will hit the market. Unfortunately, it is hard to tell. Large companies prefer to dual-source products like HDDs, which is why NVMe hard drives were developed as part of the Open Compute Project (OCP). However, while Seagate already has NVMe hard drives, its rivals have yet to introduce theirs. Once they do, and all hard drive makers can produce such products in volume, cloud service providers serving AI companies and workloads will start adopting them.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

Jame5: Yes, let's replace the 64TB, 128TB and upcoming 256TB class SSDs that are 2.5" format or smaller with... 3.5" drives that top out at 30TB of storage capacity and ultimately use more power per byte.

I'm sure a ton of AI cluster designers will opt for that solution.

drtweak: "Serial ATA (SATA), and SATA,"

A little redundant there? lol

Also, it says that it will make the drives faster? I would assume in random reads and writes? I mean, what spinner can max out a single SATA 3 (6Gbps) lane anyway? Maybe give them some more cache or DRAM?

I mean, don't get me wrong, I think it is cool, it's just that we already have controllers that can use NVMe, SAS, and SATA, all using the SAS connector. I would assume the bottleneck, though, would be the controller's connection to the rest of the board.